Camera Tools

The camera toolbox.

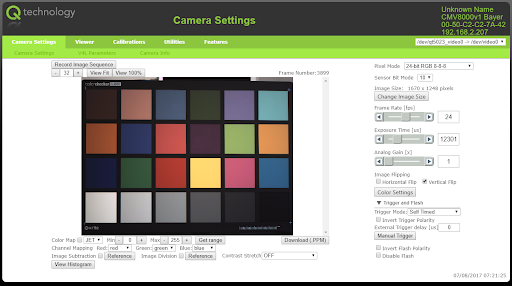

Camera Web UI

Allows easy access to the image stream and configuration of the cameras through a standard web browser, without installation of any special programs or drivers.

Point a browser to http://<camera_ip> to open the web interface.

It presents a live preview (JPEG) and allows downloading images in the following formats: PPM, JPEG, TIFF, RAW.

It gives control over all camera features, such as image format, image size, regions of interest (cropping), frame rate, exposure time, triggers and gain. All these features are controlled through V4L2 API controls, which are also available to user applications.

Other special features built into the web interface are: auto white balancing, focus adjustment measures, histograms, sensor calibration, etc.

All configurations can be saved and restored (as XML) for use in user applications or for setting up other cameras.

The camera web interface runs only over http and not https.

If you have issues accessing the web interface, verify that your browser hasn't redirected to https instead of http.

Standard Linux tools

V4L2 Controls (v4l2-ctl) 1

An application to control video4linux drivers.

List video devices

v4l2-ctl --list-devices

Get all details of a video device

v4l2-ctl -d /dev/qtec/video0 --all

Show driver info

VIDIOC_QUERYCAP

v4l2-ctl -d /dev/qtec/video0 --info

Capture

VIDIOC_STREAMON

v4l2-ctl -d /dev/qtec/video0 \

--stream-mmap=8 --stream-count=0 --verbose --stream-to=file.raw

w=1664; h=34; t=615; l=64; f=RGB3; p=500; \

v4l2-ctl -d /dev/qtec/video0 \

--set-fmt-video width=${w},height=${h},pixelformat=${f} \

--set-crop top=${t},left=${l},width=${w},height=${h} \

--set-parm ${p} \

--stream-mmap=8 --stream-count=0 \

--verbose \

--set-ctrl exposure_time_absolute=300 \

--set-ctrl sensor_bit_mode=1
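A raw capture file, such as the file.raw written with --stream-to in the first command, is just frames stored back to back, so it can be split again once the frame size is known. The following is a minimal Python sketch of that idea. The helper name iter_raw_frames is hypothetical, and it assumes tightly packed frames of width * height * bytes_per_pixel bytes with no header or padding (for example 3 bytes per pixel for RGB3).

```python
from pathlib import Path
from typing import Iterator


def iter_raw_frames(path: str, width: int, height: int,
                    bytes_per_pixel: int) -> Iterator[bytes]:
    """Yield complete frames from a raw capture file (hypothetical helper).

    Assumes frames are stored back to back with no header or padding.
    A trailing partial frame, if any, is dropped.
    """
    frame_size = width * height * bytes_per_pixel
    with Path(path).open("rb") as raw:
        while True:
            frame = raw.read(frame_size)
            if len(frame) < frame_size:
                break  # end of file, or an incomplete last frame
            yield frame
```

For a 1664x34 RGB3 capture this could be used as `for frame in iter_raw_frames("file.raw", 1664, 34, 3): ...`.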

Video Format

VIDIOC_G_FMT

v4l2-ctl -d /dev/qtec/video0 --get-fmt-video

VIDIOC_S_FMT

v4l2-ctl -d /dev/qtec/video0 \

--set-fmt-video width=<IMG_WIDTH>,height=<IMG_HEIGHT>,pixelformat=<PIXEL_FORMAT_FOURCC>

VIDIOC_ENUM_FMT

v4l2-ctl -d /dev/qtec/video0 --list-formats-ext

Crop

VIDIOC_S_SELECTION

v4l2-ctl -d /dev/qtec/video0 \

--set-crop top=<CROP_TOP>,left=<CROP_LEFT>,width=<IMG_WIDTH>,height=<IMG_HEIGHT>

FPS

VIDIOC_S_PARM

v4l2-ctl -d /dev/qtec/video0 --set-parm <fps>

VIDIOC_G_PARM

v4l2-ctl -d /dev/qtec/video0 --get-parm

VIDIOC_ENUM_FRAMEINTERVALS

v4l2-ctl -d /dev/qtec/video0 --list-frameintervals width=<IMG_WIDTH>,height=<IMG_HEIGHT>,pixelformat=<PIXEL_FORMAT_FOURCC>

Controls

VIDIOC_QUERYCTRL

v4l2-ctl -d /dev/qtec/video0 --list-ctrls

VIDIOC_QUERYMENU

v4l2-ctl -d /dev/qtec/video0 --list-ctrls-menus

VIDIOC_G_EXT_CTRLS

v4l2-ctl -d /dev/qtec/video0 --get-ctrl <control_name>

VIDIOC_S_EXT_CTRLS

v4l2-ctl -d /dev/qtec/video0 --set-ctrl <control_name>=<control_value>
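The same control commands can be driven from a script. The following is a minimal sketch, not an official wrapper: build_set_ctrl_cmd and set_controls are hypothetical helper names, and it assumes v4l2-ctl is available on the PATH. It only uses the --set-ctrl option shown above, repeated once per control.

```python
import subprocess
from typing import Dict, List, Union


def build_set_ctrl_cmd(device: str,
                       controls: Dict[str, Union[int, str]]) -> List[str]:
    """Build a v4l2-ctl argument list that sets each control in turn."""
    cmd = ["v4l2-ctl", "-d", device]
    for name, value in controls.items():
        cmd += ["--set-ctrl", f"{name}={value}"]
    return cmd


def set_controls(device: str, controls: Dict[str, Union[int, str]]) -> None:
    """Run v4l2-ctl and raise CalledProcessError if it reports a failure."""
    subprocess.run(build_set_ctrl_cmd(device, controls), check=True)
```

Building the argument list separately from running it makes it easy to log or inspect the exact command before it touches the camera.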

Yet Another V4L2 Test Application (yavta) 2

Options:

--capture=<NR_BUFFERS>

--file=<OUTPUT_FILE_NAME>

--set-control '<CTRL_NAME> <VALUE>'

--format <FORMAT>

Capture infinite buffers

yavta /dev/qtec/video0 --capture

Capture 10 buffers

yavta /dev/qtec/video0 --capture=10

Request 10 buffers (VIDIOC_REQBUFS)

yavta /dev/qtec/video0 --capture -n 10

Qt V4L2 Control Panel Application (qv4l2) 3

Requires X11 forwarding (ssh -X) or a screen connected directly to the camera.

qv4l2 -d /dev/qtec/video0

Reading/Writing Directly to the Device

Read (adjust bs to w*h*chs):

dd if=/dev/qtec/video0 of=frame.raw bs=452608 count=1

Write (adjust bs to w*h*chs):

dd if=xform_1664x34_cam2.dat.corrected.center of=/dev/qtec/xform_dist0 bs=452608
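The bs value is the size of one frame in bytes. As a hedged illustration, the arithmetic and a single read from the device node can be sketched in Python. The helper names are hypothetical, frames are assumed to be tightly packed, and the read relies on the driver supporting plain read() access, as the dd command above implies.

```python
def frame_bytes(width: int, height: int, channels: int,
                bytes_per_sample: int = 1) -> int:
    """Size of one tightly packed frame in bytes (w * h * chs * sample size)."""
    return width * height * channels * bytes_per_sample


def read_frame(device: str, size: int) -> bytes:
    """Read up to `size` bytes in a single read, like `dd bs=size count=1`."""
    with open(device, "rb", buffering=0) as dev:
        return dev.read(size)
```

For example, `read_frame("/dev/qtec/video0", frame_bytes(1664, 34, 3))` would request one 1664x34 frame with three 8-bit channels.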

Streaming RAW Frames

1. Receive

Start listening on remote machine (adjust frame size, format and fps).

Note: Requires mplayer to be installed (apt-get install mplayer).

netcat -l <dest_port> | mplayer -demuxer rawvideo \

-rawvideo w=1664:h=17:format=rgb24 -fps 2 -

Alternatively, the stream can be saved to a file, for example:

netcat -l <port> > stream.raw

2. Stream

Then start streaming from camera

cat /dev/qtec/video0 | nc <dest_ip> <port>

It is also possible to stream using v4l2-ctl:

v4l2-ctl -d /dev/qtec/video0 --stream-mmap --stream-to=- | nc <dest_ip> <port>

Streaming server

Requires installing the full netcat (apt-get install netcat) on the camera.

The default netcat is the lightweight version from busybox, which doesn't include listening capabilities.

Note: this is being changed, so in the future the full netcat will be installed by default.
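Where installing the full netcat is not an option, a few lines of Python can play the same role as a listening netcat. The sketch below is only an illustration under stated assumptions: serve_stream is a hypothetical helper, Python 3.8 or newer is assumed to be available on the camera, and the device is assumed to support plain read() access in the same way the cat command does. It accepts a single client and forwards bytes until the source ends.

```python
import socket
from typing import BinaryIO


def serve_stream(server: socket.socket, source: BinaryIO,
                 chunk_size: int = 65536) -> int:
    """Accept one client on `server` and forward `source` to it until EOF.

    Returns the number of bytes sent.
    """
    sent = 0
    conn, _addr = server.accept()
    with conn:
        while True:
            chunk = source.read(chunk_size)
            if not chunk:
                break  # source exhausted
            conn.sendall(chunk)
            sent += len(chunk)
    return sent


# Example use on the camera (not executed here; port 5000 is an arbitrary choice):
#   with socket.create_server(("0.0.0.0", 5000)) as server, \
#           open("/dev/qtec/video0", "rb", buffering=0) as video:
#       serve_stream(server, video)
```

Passing in an already listening socket keeps the port selection outside the helper, which also makes it simple to try out against an in-memory source.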

1. Stream

Start server on camera listening for connections

cat /dev/qtec/video0 | netcat -l -p <port>

It is also possible to stream using v4l2-ctl:

v4l2-ctl -d /dev/qtec/video0 --stream-mmap --stream-to=- | nc -l -p <port>

2. Receive

Receive stream on client PC using VLC (adjust frame size, format and fps).

vlc --demux rawvideo --rawvid-chroma RV24 --rawvid-fps 24 --rawvid-width 1024 --rawvid-height 1024 tcp://10.10.150.227:9988

Possible --rawvid-chroma: RV24(RGB), GREY, I420, ...

https://wiki.videolan.org/Chroma/

Receiving with python:

import socket

TCP_IP = "10.100.10.100"  # Update to reflect camera IP
TCP_PORT = 5000  # Update to reflect used port

# Update to reflect camera format
FRAME_WIDTH = 1024
FRAME_HEIGHT = 1024
N_CHANNELS = 3  # RGB
N_BYTES = 1  # 8-bit
FRAME_SIZE = FRAME_WIDTH * FRAME_HEIGHT * N_BYTES * N_CHANNELS

sock = socket.socket(socket.AF_INET,  # Internet
                     socket.SOCK_STREAM)  # TCP
print("connecting")
try:
    sock.connect((TCP_IP, TCP_PORT))
except ConnectionRefusedError:
    # Stop here instead of looping on an unconnected socket
    raise SystemExit("Could not connect to camera")

n_imgs = 0
while True:
    # Collect exactly one frame; recv() may return fewer bytes than requested
    data = bytearray()
    while len(data) < FRAME_SIZE:
        msg = sock.recv(FRAME_SIZE - len(data))
        if not msg:
            # An empty read means the camera closed the connection
            raise SystemExit("Connection closed by camera")
        data.extend(msg)
    n_imgs = n_imgs + 1
    print("Got frame: " + str(n_imgs))
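Once a full frame has been received it can be written to disk. The sketch below assumes 8-bit RGB data, as in the settings above, and stores it as a binary PPM (P6) file using only the standard library. save_ppm is a hypothetical helper and not part of any qtec tool.

```python
def save_ppm(path: str, data: bytes, width: int, height: int) -> None:
    """Write one 8-bit RGB frame as a binary PPM (P6) file."""
    expected = width * height * 3
    if len(data) != expected:
        raise ValueError(f"expected {expected} bytes, got {len(data)}")
    with open(path, "wb") as ppm:
        # PPM header: magic number, width and height, maximum sample value
        ppm.write(f"P6\n{width} {height}\n255\n".encode("ascii"))
        ppm.write(data)
```

In the receive loop this could be called as `save_ppm(f"frame_{n_imgs}.ppm", data, FRAME_WIDTH, FRAME_HEIGHT)` after each complete frame.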

qtec tools

Tools/libraries created by qtec to help with camera configuration/interaction.

quark-ctl 4

A command line tool for interacting with qtec cameras.

It exposes the functionality of quarklib on the command line.

It also contains a few extra features, such as saving PNM images and measuring image statistics (min/max/avg intensities, ...) and histograms.

quark-ctl --help

Usage: quark-ctl [OPTIONS] COMMAND [ARGS]...

Commandline tool to interact with a qtec camera.

Options:

--debug

--version Show the version and exit.

--help Show this message and exit.

Commands:

auto-exposure One-shot auto-exposure.

config Save/load/reset camera configuration.

exposure-sequence Create (and set) an exposure sequence.

save-image Save single/multiple/average frame(s).

stats View and export camera statistics (including...

Refer to the official docs for more details.

quarklib 5

A Python library with helper camera functions based on qamlib.

Includes functionality for:

- Saving/loading controls

- LUT interaction (FPGA based look-up tables)

- Saving PNM images

- Auto exposure

- Auto white-balance

Example:

import quarklib

device = "/dev/qtec/video0"

path = "config.json"

# To save all controls use 'controls = None'

controls = ["red gain", "green gain", "blue gain"]

#save settings to config file

quarklib.save(

path=path,

device=device,

controls=controls,

fmt=True,

crop=True,

framerate=True,

)

#load settings from config file

quarklib.load(

path=path,

device=device,

controls=True,

fmt=True,

crop=True,

framerate=True,

)

Refer to the official docs for more details or check the Examples section.

qamlib 6

A Python library for interacting with a V4L2 camera to get image data and control the camera.

Example:

import qamlib

cam = qamlib.Camera() # Opens /dev/qtec/video0

# Try to set exposure time (us)

cam.set_control("Exposure Time, Absolute", 1000)

exp = cam.get_control("Exposure Time, Absolute")

# Print the exposure time that we ended up with

print(f"Got exposure time {exp}us")

# Start and stop streaming with context manager

with cam:

    meta, frame = cam.get_frame()

    print(meta.sequence) # Frame number since start of streaming

Refer to the official docs for more details or check the Examples section.

gstreamer 7

GStreamer is an open source multimedia framework.

It uses the concept of pipelines: src ! elem1 ! elem2 ! … ! sink

Has many plugins related to encoding/decoding and streaming. It is also possible to create your own plugins.

Examples of useful pipelines

v4l2src

Source element to capture video from v4l2 devices (like qtec cameras).

- set num-buffers=X in the v4l2src in order to select how many frames should be captured. Otherwise it will capture until stopped.

gst-launch-1.0 v4l2src device=/dev/qtec/video0 num-buffers=10 ! fakesink

- set selection=crop,left=<LEFT>,top=<TOP>,width=<WIDTH>,height=<HEIGHT> in order to adjust the cropping rectangle. Remember to also adjust the width and height in the CAPS afterwards.

gst-launch-1.0 v4l2src device=/dev/qtec/video0 selection=crop,left=64,top=387,width=2560,height=1800 ! video/x-raw, format=RGB, width=2560, height=1800, framerate=30/1 ! fakesink

- set hw-center-crop=true to auto-crop to the center of the sensor

gst-launch-1.0 v4l2src device=/dev/qtec/video0 hw-center-crop=true ! video/x-raw, format=RGB, width=100, height=100, framerate=30/1 ! fakesink

- set extra-controls="c,<control_name>=<control_value>" in order to set v4l2 controls.

gst-launch-1.0 v4l2src device=/dev/qtec/video0 extra-controls="c,exposure_time_absolute=1000" ! fakesink
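When pipelines are assembled from a script, the v4l2src properties described above can be combined programmatically. The following is a small sketch under stated assumptions: v4l2src_element and launch_line are hypothetical helper names, and only the properties listed above (num-buffers, selection and extra-controls) are used. It builds the text of a pipeline and does not start GStreamer.

```python
from typing import Dict, Optional, Tuple, Union


def v4l2src_element(device: str = "/dev/qtec/video0",
                    num_buffers: Optional[int] = None,
                    crop: Optional[Tuple[int, int, int, int]] = None,
                    controls: Optional[Dict[str, Union[int, str]]] = None) -> str:
    """Build the v4l2src part of a pipeline; crop is (left, top, width, height)."""
    parts = ["v4l2src", f"device={device}"]
    if num_buffers is not None:
        parts.append(f"num-buffers={num_buffers}")
    if crop is not None:
        left, top, width, height = crop
        parts.append(
            f"selection=crop,left={left},top={top},width={width},height={height}"
        )
    if controls:
        ctrl_list = ",".join(f"{name}={value}" for name, value in controls.items())
        parts.append(f'extra-controls="c,{ctrl_list}"')
    return " ".join(parts)


def launch_line(*elements: str) -> str:
    """Join pipeline elements with the ' ! ' link separator."""
    return " ! ".join(elements)
```

For instance, `launch_line(v4l2src_element(num_buffers=10), "fakesink")` gives the argument string for the first gst-launch-1.0 example above.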

TCP Streaming

RAW

Must adjust the frame format (pixel format, width, height and fps) on the receiving side in order to "parse" the frames.

- Send:

gst-launch-1.0 v4l2src device=/dev/qtec/video0 ! video/x-raw, format=RGB, width=100, height=100, framerate=10/1 ! tcpserversink host=0.0.0.0 port=5000 --gst-debug=*:3

- Receive:

gst-launch-1.0 tcpclientsrc host=<cam_ip> port=5000 ! rawvideoparse width=100 height=100 framerate=10/1 format=rgb ! queue ! videoconvert ! queue ! ximagesink sync=0

- Receive with vlc:

vlc --demux rawvideo --rawvid-chroma RV24 --rawvid-fps 20 --rawvid-width 1024 --rawvid-height 1024 tcp://<cam_ip>:5000

Possible --rawvid-chroma: RV24(RGB), GREY, I420, ...

https://wiki.videolan.org/Chroma/

JPEG

- Send:

gst-launch-1.0 v4l2src device=/dev/qtec/video0 ! video/x-raw, format=RGB, width=100, height=100, framerate=10/1 ! queue ! jpegenc ! queue ! tcpserversink host=0.0.0.0 port=5000 --gst-debug=*:3

- Receive:

gst-launch-1.0 tcpclientsrc host=<cam_ip> port=5000 ! jpegparse ! jpegdec ! queue ! videoconvert ! queue ! ximagesink sync=0

- Receive with vlc:

vlc tcp://<cam_ip>:5000

UDP Streaming

RAW

Must adjust the frame format (pixel format, width, height and fps) on the receiving side in order to "parse" the frames.

- Send:

gst-launch-1.0 -v v4l2src device=/dev/qtec/video0 ! video/x-raw, format=BGRA, width=320, height=240, framerate=30/1 ! rtpvrawpay ! udpsink host="<target_ip>" port="5000" -v

- Receive:

gst-launch-1.0 udpsrc port="5000" caps = "application/x-rtp, media=(string)video, clock-rate=(int)90000, encoding-name=(string)RAW, sampling=(string)BGRA, depth=(string)8, width=(string)320, height=(string)240, payload=(int)96, a-framerate=(string)30" ! rtpvrawdepay ! videoconvert ! queue ! xvimagesink sync=false

JPEG

- Send:

gst-launch-1.0 rtpbin name=rbin v4l2src device=/dev/qtec/video0 ! video/x-raw,framerate=10/1,format=RGB,width=1280,height=720 ! videoconvert ! timeoverlay ! jpegenc ! rtpjpegpay ! rbin.send_rtp_sink_0 rbin.send_rtp_src_0 ! udpsink port=5000 host=127.0.0.1 sync=0 rbin.send_rtcp_src_0 ! udpsink port=5001 host=127.0.0.1 sync=false async=false udpsrc port=5003 address=127.0.0.1 ! rbin.recv_rtcp_sink_0

- Receive:

gst-launch-1.0 udpsrc address=<cam_ip> port=5000 ! application/x-rtp, media=video, clock-rate=90000, encoding-name=JPEG, payload=26 ! rtpbin ! rtpjpegdepay ! jpegdec ! videoconvert ! autovideosink sync=0

File Saving (RAW)

The I/O of a CFast card or USB pen drive is normally too slow for saving RAW files.

Therefore it is recommended to save to the RAM instead (/tmp), though it will have more limited space (4GB max, ~30secs in "normal" conditions).

Or use an SSD for longer recordings.
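How long a RAW recording fits in a given amount of space follows directly from the frame format. The sketch below shows the arithmetic with hypothetical helper names. It assumes tightly packed frames and ignores any file system or container overhead, so treat the result as an upper bound.

```python
def raw_data_rate(width: int, height: int, bytes_per_pixel: int,
                  fps: float) -> float:
    """Uncompressed data rate in bytes per second."""
    return width * height * bytes_per_pixel * fps


def max_recording_seconds(capacity_bytes: int, width: int, height: int,
                          bytes_per_pixel: int, fps: float) -> float:
    """Upper bound on the recording time that fits in capacity_bytes."""
    return capacity_bytes / raw_data_rate(width, height, bytes_per_pixel, fps)
```

Calling `max_recording_seconds` with the free space in /tmp and the configured format gives a quick check of whether RAM is enough or an SSD is needed.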

Multiple RAW files

Must adjust the frame format (pixel format, width, height and fps) when playing in order to "parse" the frames.

- Record:

GST_DEBUG=*:3 gst-launch-1.0 v4l2src device=/dev/qtec/video0 num-buffers=10 ! video/x-raw, format=RGB, width=100, height=100, framerate=10/1 ! multifilesink location=/tmp/test%d.raw -v

- Play:

GST_DEBUG=*:3 gst-launch-1.0 multifilesrc location=/tmp/test%d.raw ! videoparse format=rgb width=100 height=100 framerate=10/1 ! clocksync ! videoconvert ! ximagesink sync=0

Single RAW file

- Record:

GST_DEBUG=*:3 gst-launch-1.0 v4l2src device=/dev/qtec/video0 num-buffers=10 ! video/x-raw,format=RGB,width=100,height=100,framerate=10/1 ! filesink location=/tmp/test.raw

- Play:

gst-launch-1.0 filesrc location=/tmp/test.raw ! videoparse format=rgb width=100 height=100 framerate=10/1 ! clocksync ! videoconvert ! ximagesink sync=0

- Play with vlc:

vlc --demux rawvideo --rawvid-chroma RV24 --rawvid-fps 10 --rawvid-width 100 --rawvid-height 100 test.raw

AVI container

With an AVI container there is no need to use videoparse and adjust the CAPS (frame format and size), since that information is saved in the container.

Note: avimux unfortunately only supports a small number of pixel formats: { YUY2, I420, BGR, BGRx, BGRA, GRAY8, UYVY, v210 }.
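Because of this restriction it can be useful to check the pixel format before building a recording pipeline. The following sketch uses the list of formats from the note above. The names AVIMUX_RAW_FORMATS and avi_record_caps are hypothetical, and the caps string mirrors the one used in the record examples.

```python
# Raw pixel formats accepted by avimux, as listed in the note above
AVIMUX_RAW_FORMATS = frozenset(
    {"YUY2", "I420", "BGR", "BGRx", "BGRA", "GRAY8", "UYVY", "v210"}
)


def avi_record_caps(pixel_format: str, width: int, height: int, fps: int) -> str:
    """Return a caps string for recording to AVI, or raise for other formats."""
    if pixel_format not in AVIMUX_RAW_FORMATS:
        raise ValueError(
            f"{pixel_format} is not in the avimux format list; "
            "convert it first or record plain RAW files instead"
        )
    return (
        f"video/x-raw,format={pixel_format},width={width},"
        f"height={height},framerate={fps}/1"
    )
```

Failing early with a clear message is easier to diagnose than a caps negotiation error from a running pipeline.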

- Record:

GST_DEBUG=*:3 gst-launch-1.0 v4l2src device=/dev/qtec/video0 num-buffers=10 ! video/x-raw,format=BGR,width=100,height=100,framerate=10/1 ! avimux ! filesink location=/tmp/test.avi

- Play:

gst-launch-1.0 filesrc location=/tmp/test.avi ! avidemux ! clocksync ! videoconvert ! ximagesink sync=0

- Play with vlc:

vlc test.avi

- Extract files:

gst-launch-1.0 filesrc location=/tmp/test.avi ! avidemux ! multifilesink location=/tmp/test%d.raw

or

gst-launch-1.0 filesrc location=/tmp/test.avi ! avidemux ! queue ! pnmenc ! multifilesink location=/tmp/test%d.pnm

Multiple PNM files

Supported pixel formats: { RGB, GRAY8, GRAY16_BE, GRAY16_LE }.

- Record:

GST_DEBUG=*:3 gst-launch-1.0 v4l2src device=/dev/qtec/video0 num-buffers=10 ! video/x-raw,format=RGB,width=100,height=100,framerate=10/1 ! queue ! pnmenc ! multifilesink location=/tmp/test%d.pnm -v

- Play:

GST_DEBUG=*:3 gst-launch-1.0 multifilesrc location=/tmp/test%d.pnm ! pnmdec ! videorate ! video/x-raw,framerate=10/1 ! clocksync ! videoconvert ! ximagesink sync=0

File Saving (JPEG)

JPEG in AVI container (MJPEG)

- Record:

GST_DEBUG=*:3 gst-launch-1.0 v4l2src io-mode=2 hw-center-crop=true device=/dev/qtec/video0 num-buffers=10 ! video/x-raw,format=RGB,width=100,height=100,framerate=10/1 ! queue leaky=2 max-size-bytes=0 max-size-time=0 max-size-buffers=5 ! videoconvert ! jpegenc ! avimux ! filesink location=/tmp/test_jpeg.avi

- Play:

GST_DEBUG=*:3 gst-launch-1.0 filesrc location=/tmp/test_jpeg.avi ! avidemux ! jpegdec ! clocksync ! videoconvert ! ximagesink sync=0

- Play with vlc:

vlc test_jpeg.avi

- Extract files:

gst-launch-1.0 filesrc location=/tmp/test_jpeg.avi ! avidemux ! multifilesink location=/tmp/test%d.jpeg

gstreamer-python-utils 8

A collection of classes and examples that can be used to speed up development of Python applications that use GStreamer as the base API.

It contains some base GStreamer classes for building and managing pipelines, as well as some example applications using those classes.

These examples are helper applications that can be used to record and play image streams. Those streams can be used for project development.

import sys
import time

from py_gst.pipeline import GstPipeline
from py_gst.pipeline import GstError


def main():
    pipe = None
    try:
        pipe = GstPipeline("videotestsrc num-buffers=10 ! fakesink")
        pipe.run()
        # Poll until the pipeline has finished
        while pipe.is_running():
            time.sleep(1)
    except GstError as err:
        sys.stderr.write(f"{err}")
        GstPipeline.cleanup(pipe)
        return 1
    return 0


if __name__ == "__main__":
    sys.exit(main())

import sys

from py_gst.appsink import AppSinkPipe
from py_gst.pipeline import GstError, GstPipeline


def main():
    pipe = None
    try:
        pipe = AppSinkPipe("videotestsrc num-buffers=10 ! appsink", True, 10)
        pipe.run()
        buffers = []
        # Collect buffers from the appsink while the pipeline is running
        while pipe.is_running():
            buffer = pipe.pop()
            if buffer:
                buffers.append(buffer)
        print(f"Got: {len(buffers)} buffers")
    except GstError as err:
        sys.stderr.write(f"{err}")
        GstPipeline.cleanup(pipe)
        return 1
    return 0


if __name__ == "__main__":
    sys.exit(main())