Programming Guide

The userspace interface to the image sensors is provided through the Video4Linux API.

V4L2 API

Video4Linux (V4L) is a collection of device driver APIs and frameworks for video devices in Linux. It is used to handle real-time video capture, processing, and output. Its purpose is to provide a unified interface for video hardware (e.g. webcams, TV tuners, and video capture cards) in order to simplify the development of software that needs to interact with such hardware. Video4Linux is currently in its second version: Video4Linux2 (V4L2).

Video4Linux device drivers are responsible for creating V4L2 device nodes (/dev/videoX) and tracking data from these nodes.

- IOCTL calls

  - ioctl = input/output control: a system call for device-specific input/output operations
  - the request code is device specific, e.g.: ioctl(fd, VIDIOC_G_FMT, arg);
  - on failure, ioctl() returns -1 and sets errno (EINVAL, EBUSY, …)
  - the V4L2 request codes are defined in ‘videodev2.h’, e.g.: #define VIDIOC_G_FMT _IOWR('V', 4, struct v4l2_format)

Programming a V4L2 device consists of these steps:

- Opening the device (/dev/qtec/video0)

- Changing device properties: selecting a video and audio input, video standard, picture brightness, etc.

- Negotiating a data format (size, pixel format)

- Negotiating an input/output method (read, mmap, …)

- The actual input/output loop

- Closing the device

Multiple processes can open the video device simultaneously to change controls or parameters. However, only a single process at a time is allowed to read images from the video device.

V4L2 Image Capture

- read

  - just read from the file (malloc memory for a single buffer)
  - no metadata (timestamp, frame number, etc.)

- mmap / (userptr)

  - request N buffers: VIDIOC_REQBUFS
  - query buffers (size, …): VIDIOC_QUERYBUF
  - allocate memory: mmap()
  - queue buffers: VIDIOC_QBUF
  - start stream: VIDIOC_STREAMON
  - wait for buffer to be available: select()
  - dequeue and re-queue buffers: VIDIOC_DQBUF/VIDIOC_QBUF
  - stop stream: VIDIOC_STREAMOFF
  - deallocate memory: munmap()
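The mmap buffer cycle above can be illustrated with a toy model written purely for explanation; it makes no V4L2 calls. The driver fills the oldest queued buffer, the application dequeues, processes and re-queues it, and when no buffer is queued the frame is lost. The function name and the `busy_frames` parameter are hypothetical, and real drivers may handle a full queue differently.

```python
from collections import deque


def simulate(n_buffers, n_frames, busy_frames):
    """Toy model of V4L2 mmap streaming (explanatory only, no real ioctls).

    busy_frames: frame numbers during which the application is still busy
    and does not dequeue anything (hypothetical parameter).
    Returns (sequence numbers received by the application, dropped frames).
    """
    queued = deque(range(n_buffers))  # empty buffers owned by the driver (QBUF)
    filled = deque()                  # (index, sequence) ready for DQBUF
    received, dropped = [], 0
    for seq in range(n_frames):
        # Driver side: fill the oldest queued buffer, or drop the frame
        if queued:
            filled.append((queued.popleft(), seq))
        else:
            dropped += 1
        # Application side: DQBUF, process, then QBUF the same buffer again
        if seq not in busy_frames:
            while filled:
                index, frame_seq = filled.popleft()
                received.append(frame_seq)
                queued.append(index)
    return received, dropped


print(simulate(2, 6, {1, 2, 3}))  # ([0, 1, 2, 5], 2)
print(simulate(4, 6, {1, 2, 3}))  # ([0, 1, 2, 3, 4, 5], 0)
```

With two buffers the same stall loses frames 3 and 4, while four buffers absorb it; in this model the gap in the sequence numbers is what reveals the drop.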

Camera Settings

- Required

  - Image size: width x height
  - Region of interest (cropping): left, top, width, height
  - Pixel format: grey, RGB, 16-bit grey, ...
  - Bit mode: 8, 10 or 12-bit (important when using 16-bit grey)
  - Framerate
  - Exposure time: affects motion blur

- Optional

  - White balance (RGB gains): proper colors
  - Analog/digital gain
  - Binning: column/row
  - LUT/convolution kernel
  - Gain/distortion maps
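When allocating buffers (for example for the read method), the required size follows from the image size and the pixel format. This sketch assumes packed, single-plane formats without row padding; the authoritative value is fmt.fmt.pix.sizeimage as reported by the driver after VIDIOC_S_FMT/VIDIOC_G_FMT. The FourCC codes are the ones used in the OpenCV example further down, packed the same way as v4l2_fourcc() in videodev2.h; the helper names are hypothetical.

```python
# Bytes per pixel for the pixel formats mentioned above (packed, one plane)
BYTES_PER_PIXEL = {
    "GREY": 1,  # 8-bit grey
    "Y16 ": 2,  # 16-bit grey (the FourCC ends with a space)
    "RGB3": 3,  # 24-bit RGB
}


def fourcc(code):
    """Pack a 4-character code like v4l2_fourcc() in videodev2.h."""
    a, b, c, d = (ord(ch) for ch in code)
    return a | (b << 8) | (c << 16) | (d << 24)


def frame_size(width, height, code):
    """Bytes per frame, assuming a packed format with no row padding."""
    return width * height * BYTES_PER_PIXEL[code]


print(hex(fourcc("GREY")))             # 0x59455247
print(frame_size(1024, 1024, "Y16 "))  # 2097152
print(frame_size(640, 480, "RGB3"))    # 921600
```

When cropping, width and height are those of the region of interest, not of the full sensor.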

Formats

- Bayer

  - RGB: decimated (w/2 x h/2) or interpolated (full size)
  - grey (8/16-bit): decimated (w/2 x h/2)
  - Bayer: raw Bayer array (full size)

- Mono

  - 8-bit
  - 16-bit (LE / BE)
  - 10 vs 12-bit mode: shift up (4095 -> 65520): saturation!
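For 16-bit grey, the byte order (LE/BE) and the bit mode determine how raw bytes map to pixel values. This sketch assumes the sensor value is left-aligned (shifted up) in the 16-bit word, as the 4095 -> 65520 example suggests, and that 10-bit mode is aligned the same way; both are assumptions to verify against real frames. It uses only the standard library, and the helper names are hypothetical.

```python
import struct


def decode_grey16(raw, big_endian=False, bit_mode=12):
    """Return sensor-native values from a raw 16-bit grey buffer.

    Assumes each value is left-aligned (shifted up) in its 16-bit word.
    """
    order = ">" if big_endian else "<"
    count = len(raw) // 2
    words = struct.unpack(f"{order}{count}H", raw[:count * 2])
    shift = 16 - bit_mode
    return [word >> shift for word in words]


def saturation_level(bit_mode=12):
    """Largest 16-bit value a left-aligned sample can reach (not 65535)."""
    return ((1 << bit_mode) - 1) << (16 - bit_mode)


raw = struct.pack("<3H", 4095 << 4, 2048 << 4, 0)
print(decode_grey16(raw))    # [4095, 2048, 0]
print(saturation_level(12))  # 65520
print(saturation_level(10))  # 65472
```

With NumPy the same decoding is np.frombuffer(raw, dtype="<u2") >> 4 (or dtype=">u2" for big endian). A saturation test against 65535 would never trigger under this assumption; compare against saturation_level() instead.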

Creating applications

The V4L2 API can be accessed natively through C/C++ (requires cross-compiling or building directly on the camera) or through Python bindings.

Python

- Doesn't require compilation

- No native V4L2 (requires libraries)

One option is to use OpenCV, as it has a V4L2 backend for image capture and handles the V4L2 interaction automatically. However, it has many limitations, so it is not recommended unless only very basic support is needed.

If more control is desired it is necessary to interact directly with V4L2.

There are some publicly available V4L2 Python bindings (e.g. v4l2py), which are ctypes based. They are very low-level, very close to the C code, and provide direct IOCTL control. They are all missing some newer functionality (extended controls, selection, ...), which is not difficult to add since it is a simple ctypes conversion. However, this results in a lot of boilerplate, so it is not a recommended method either.
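To give an idea of that boilerplate, this is roughly what reading and setting a single control through raw ioctl() calls with ctypes looks like. It is a minimal sketch, not the v4l2py API: the helper names are hypothetical, and the request codes assume the asm-generic ioctl layout used on x86 and ARM.

```python
import ctypes
import fcntl


class v4l2_control(ctypes.Structure):
    """Mirror of struct v4l2_control { __u32 id; __s32 value; } (videodev2.h)."""
    _fields_ = [("id", ctypes.c_uint32), ("value", ctypes.c_int32)]


def _IOWR(type_char, nr, size):
    # Read/write request code, asm-generic layout (assumed)
    return (3 << 30) | (size << 16) | (ord(type_char) << 8) | nr


VIDIOC_G_CTRL = _IOWR("V", 27, ctypes.sizeof(v4l2_control))
VIDIOC_S_CTRL = _IOWR("V", 28, ctypes.sizeof(v4l2_control))

# From videodev2.h: V4L2_CID_CAMERA_CLASS_BASE + 2 ("Exposure Time, Absolute")
V4L2_CID_EXPOSURE_ABSOLUTE = 0x009A0902


def get_control(fd, ctrl_id):
    ctrl = v4l2_control(id=ctrl_id)
    fcntl.ioctl(fd, VIDIOC_G_CTRL, ctrl)  # the kernel fills in ctrl.value
    return ctrl.value


def set_control(fd, ctrl_id, value):
    ctrl = v4l2_control(id=ctrl_id, value=value)
    fcntl.ioctl(fd, VIDIOC_S_CTRL, ctrl)  # raises OSError on failure
```

On the camera this could be used as fd = os.open("/dev/qtec/video0", os.O_RDWR) followed by set_control(fd, V4L2_CID_EXPOSURE_ABSOLUTE, 1000). Every further ioctl (formats, selection, buffers) needs its own structure definition like this.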

In order to handle these issues, Qtechnology has developed its own C++-based Python bindings (qamlib), which expose a higher-level Python interface, similar to OpenCV.

This is the recommended method.

qamlib

For more details refer to the qamlib section.

import qamlib

cam = qamlib.Camera()  # Opens /dev/qtec/video0

# Try to set exposure time (us)
cam.set_control("Exposure Time, Absolute", 1000)
exp = cam.get_control("Exposure Time, Absolute")

# Print the exposure time that we ended up with
print(f"Got exposure time {exp}us")

# Start and stop streaming with context manager
with cam:
    meta, frame = cam.get_frame()
    print(meta.sequence)  # Frame number since start of streaming

Jupyter

Jupyter Notebook can also be installed with: pip3 install jupyter.

Run it with:

jupyter notebook --allow-root --no-browser --port=8888 --ip=<cam_ip>

or

jupyter lab --allow-root --no-browser --port=8888 --ip=<cam_ip>

Then access it in your browser at http://<cam_ip>:8888 with the generated token, or directly use the URL provided by the camera: http://<cam_ip>:8888/?token=<token>.

Showing images or video

OpenCV's imshow() does not work very well under the Jupyter notebook: it opens a new window instead of using the notebook. In order to show images or "video" directly inside the notebook, use matplotlib or IPython.display instead.

OpenCV example:

Opens a new window, does not display inside the notebook. Not recommended.

import qamlib
import cv2

cam = qamlib.Camera()
invert_colors = True

with cam:
    while True:
        meta, frame = cam.get_frame()
        # OpenCV expects BGR instead of RGB
        if invert_colors:
            img = frame[:, :, ::-1]
        else:
            img = frame
        cv2.imshow('my picture', img)
        k = cv2.waitKey(100)
        if k == 27:  # Esc key
            break

cv2.destroyAllWindows()

matplotlib example:

Requires apt-get install python3-matplotlib

Note: Installing matplotlib directly from pip might result in some unresolved dependencies (SVG related).

Single image inside notebook

import qamlib
from matplotlib import pyplot as plt

cam = qamlib.Camera()

with cam:
    meta, frame = cam.get_frame()

plt.imshow(frame)
plt.title('my picture')
plt.xticks([]), plt.yticks([])  # Hides the graph ticks and x / y axis
plt.show()

Live stream in a new window (requires pip3 install PyQt5):

%matplotlib qt
import qamlib
from matplotlib import pyplot as plt

INTERVAL_SEC = 0.1

cam = qamlib.Camera()

with cam:
    while True:
        meta, frame = cam.get_frame()
        plt.imshow(frame)
        plt.title(f'FRAME {meta.sequence+1}')
        plt.xticks([]), plt.yticks([])  # Hides the graph ticks and x / y axis
        plt.draw()
        plt.pause(INTERVAL_SEC)
        plt.cla()

IPython.display example:

Live stream inside notebook

import qamlib
import cv2
from IPython.display import display, Image

display_handle = display(None, display_id=True)
cam = qamlib.Camera()
invert_colors = True

with cam:
    while True:
        meta, frame = cam.get_frame()
        # OpenCV expects BGR instead of RGB
        if invert_colors:
            img = frame[:, :, ::-1]
        else:
            img = frame
        # Encode to JPEG so the notebook can display it
        _, jpeg = cv2.imencode('.jpeg', img)
        display_handle.update(Image(data=jpeg.tobytes()))

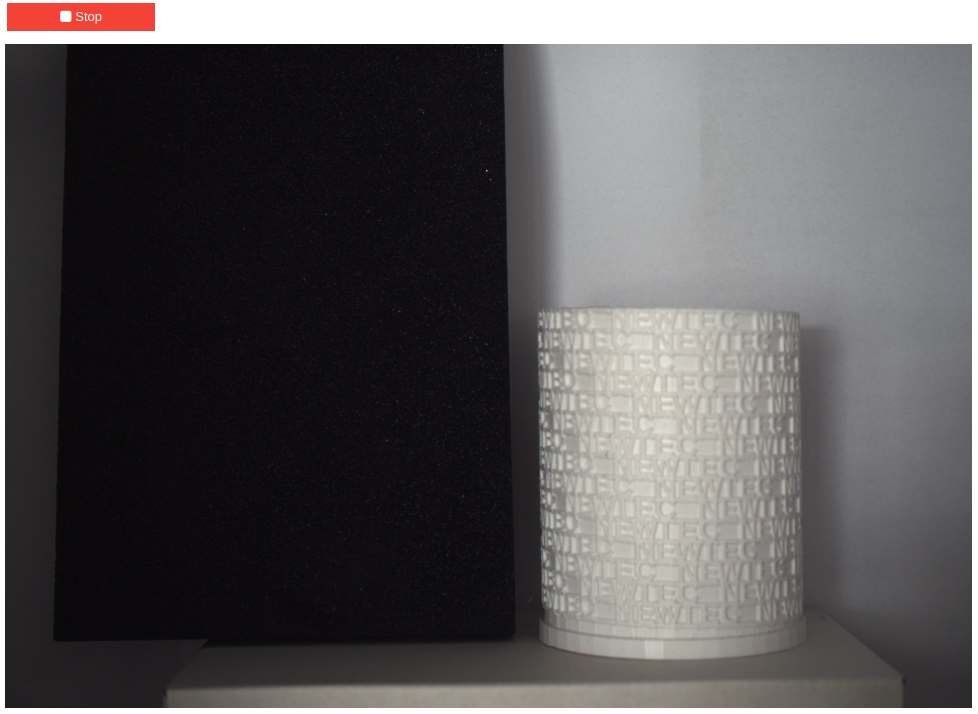

Add a stop button:

import threading

import cv2
import ipywidgets as widgets
import qamlib
from IPython.display import display, Image

# Stop button
# ================
stopButton = widgets.ToggleButton(
    value=False,
    description='Stop',
    disabled=False,
    button_style='danger',  # 'success', 'info', 'warning', 'danger' or ''
    tooltip='Description',
    icon='square'  # (FontAwesome names without the `fa-` prefix)
)

# Display function
# ================
def view(button):
    cam = qamlib.Camera()
    invert_colors = True
    display_handle = display(None, display_id=True)
    with cam:
        while True:
            meta, frame = cam.get_frame()
            # OpenCV expects BGR instead of RGB
            if invert_colors:
                img = frame[:, :, ::-1]
            else:
                img = frame
            _, jpeg = cv2.imencode('.jpeg', img)
            display_handle.update(Image(data=jpeg.tobytes()))
            # Stop streaming once the toggle button is pressed
            if button.value:
                display_handle.update(None)
                break

# Run
# ================
display(stopButton)
thread = threading.Thread(target=view, args=(stopButton,))
thread.start()

OpenCV

OpenCV is an open source computer vision library.

It has a V4L2 backend for image capture. It is an easy way to get started, as it handles the V4L2 interaction automatically. However, it has many limitations, so it is not recommended unless only very basic support is needed.

- Limitations:

  - no API for cropping
  - no access to most V4L2 controls, only a small predefined subset

- Work-around: set cropping and other V4L2 controls with v4l2-ctl system() calls (see the example below)

Another limitation is that there is no control over how OpenCV handles V4L2 internally (mmap or read), no access to frame metadata (frame number and timestamp), no control over the handling of frame drops, etc.

Simple example:

import sys
import time

import cv2
import numpy as np

IMG_WIDTH = 1024
IMG_HEIGHT = 1024
FPS = 30
#PIXEL_FORMAT = cv2.VideoWriter.fourcc('Y','1','6',' ')
#PIXEL_FORMAT = cv2.VideoWriter.fourcc('R','G','B','3')
PIXEL_FORMAT = cv2.VideoWriter.fourcc('G','R','E','Y')

if __name__ == "__main__":
    if len(sys.argv) > 1:
        device = sys.argv[1]
    else:
        device = "/dev/qtec/video0"

    # open video capture
    stream = cv2.VideoCapture(device, cv2.CAP_V4L2)
    if not stream.isOpened():
        print("Error opening device")
        sys.exit(1)

    # set frame properties
    stream.set(cv2.CAP_PROP_FRAME_WIDTH, IMG_WIDTH)
    stream.set(cv2.CAP_PROP_FRAME_HEIGHT, IMG_HEIGHT)
    stream.set(cv2.CAP_PROP_FPS, FPS)
    stream.set(cv2.CAP_PROP_FOURCC, PIXEL_FORMAT)
    # disable conversion to RGB on output image
    stream.set(cv2.CAP_PROP_CONVERT_RGB, 0)
    # set v4l2 controls available in OpenCV (only a small subset)
    stream.set(cv2.CAP_PROP_EXPOSURE, 3000)
    #stream.set(cv2.CAP_PROP_GAIN, 13100)
    # set cvMat format?
    #stream.set(cv2.CAP_PROP_FORMAT, -1)

    # get frames
    folder = "/tmp"
    num = 0
    while True:
        ret, frame = stream.read()
        frame_time = time.time()
        if not ret:
            print("Error getting frame")
            break
        #buff = np.frombuffer(frame, np.uint16)
        print(f"got frame time:{frame_time} size:{frame.shape}")
        cv2.imwrite(f'{folder}/cap_{num}.pnm', frame)
        num += 1

    stream.release()

Adjusting v4l2 settings using v4l2-ctl system() calls:

import os
import sys
...
# set crop, requires setting image size and format to work
if os.system(f"v4l2-ctl -d {device} --set-fmt-video width={IMG_WIDTH},height={IMG_HEIGHT},pixelformat={PIXEL_FORMAT} --set-crop top={CROP_TOP},left={CROP_LEFT},width={IMG_WIDTH},height={IMG_HEIGHT}"):
    print("Error setting image size and cropping")
    sys.exit(1)

# set v4l2 controls using v4l2-ctl (any control)
ctrls = [
    {"name": "exposure_time_absolute", "value": 10000},
    {"name": "red_balance", "value": 16384},
    {"name": "green_balance", "value": 16384},
    {"name": "blue_balance", "value": 16384},
    {"name": "sensor_bit_mode", "value": SENSOR_BIT_MODE},
]
for ctrl in ctrls:
    if os.system(f"v4l2-ctl -d {device} -c {ctrl['name']}={ctrl['value']}"):
        print(f"Error setting {ctrl['name']}")
        sys.exit(1)
...
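A variant of the v4l2-ctl approach that avoids the shell: build the argument list in Python and run it with subprocess.run(), so no quoting issues arise. v4l2-ctl accepts several controls in one -c option as a comma-separated list; the helper names here are hypothetical.

```python
import subprocess


def v4l2_ctl_args(device, controls):
    """Build one v4l2-ctl call that sets all controls (comma-separated -c list)."""
    pairs = ",".join(f"{name}={value}" for name, value in controls.items())
    return ["v4l2-ctl", "-d", device, "-c", pairs]


def set_controls(device, controls):
    """Run v4l2-ctl without a shell; raises CalledProcessError on failure."""
    subprocess.run(v4l2_ctl_args(device, controls), check=True)


print(v4l2_ctl_args("/dev/qtec/video0",
                    {"exposure_time_absolute": 10000, "red_balance": 16384}))
# ['v4l2-ctl', '-d', '/dev/qtec/video0', '-c', 'exposure_time_absolute=10000,red_balance=16384']
```

The trade-off is that a failure no longer tells you which control was rejected; calling set_controls() once per control keeps that information, as the loop above does.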

gstreamer

gstreamer is an open source multimedia framework.

It also handles the interaction with V4L2 automatically, and is well suited for e.g. encoding (PPM, TIFF, PNG, …), saving to file or streaming.

It uses the concept of pipelines: src ! elem1 ! elem2 ! … ! sink

Example pipelines:

gst-launch-1.0 \
    v4l2src device=/dev/qtec/video0 extra-controls="c,sensor_bit_mode=1" \
    num-buffers=100 selection=crop,left=100,top=200,width=800,height=600 ! \
    video/x-raw, format=GREY16, width=800, height=600, framerate=10/1 ! \
    multifilesink location=test%d.raw

gst-launch-1.0 \
    v4l2src device=/dev/qtec/video0 extra-controls="c,sensor_bit_mode=1" \
    selection=crop,left=100,top=200,width=800,height=600 ! \
    video/x-raw, format=GREY16, width=800, height=600, framerate=10/1 ! fakesink
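Since a pipeline is just a string of elements joined by !, it can be assembled in Python, which makes it easy to vary the crop or frame rate from a script. This hypothetical helper reproduces the second example pipeline (element properties copied as-is from the examples above); the resulting string could, for instance, be passed to Gst.parse_launch() from the GStreamer Python bindings (PyGObject).

```python
def crop_pipeline(device, left, top, width, height, fps, sink="fakesink"):
    """Join GStreamer elements with ' ! ', like the example pipelines above."""
    elements = [
        f'v4l2src device={device} extra-controls="c,sensor_bit_mode=1" '
        f"selection=crop,left={left},top={top},width={width},height={height}",
        f"video/x-raw, format=GREY16, width={width}, height={height}, "
        f"framerate={fps}/1",
        sink,
    ]
    return " ! ".join(elements)


print(crop_pipeline("/dev/qtec/video0", 100, 200, 800, 600, 10))
```

Using the same width and height for the crop and for the caps keeps the two consistent, which the pipeline requires.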

An appsink is required to get frames out to e.g. a Python application: v4l2src device=/dev/qtec/video0 ! ... ! appsink.

See the official gstreamer tutorial "Short-cutting the pipeline" for an example.

gstreamer-python-utils

Alternatively, gstreamer-python-utils can be used to facilitate writing Python applications that interact with gstreamer.

import sys

from py_gst.appsink import AppSinkPipe
from py_gst.pipeline import GstError
from py_gst.pipeline import GstPipeline  # assumed location of GstPipeline


def main():
    pipe = None
    try:
        pipe = AppSinkPipe("videotestsrc num-buffers=10 ! appsink", True, 10)
        pipe.run()
        buffers = []
        while pipe.is_running():
            buffer = pipe.pop()
            if buffer:
                # process buffer
                buffers.append(buffer)
        print(f"Got: {len(buffers)} buffers")
    except GstError as err:
        sys.stderr.write(f"{err}\n")
        GstPipeline.cleanup(pipe)
        return 1
    return 0


if __name__ == "__main__":
    sys.exit(main())

C/C++

- Native V4L2

- Cross-compile or build directly on camera (GCC)

Building directly on the camera

Install gcc on the camera: apt-get install gcc.

Yocto SDK: Cross Compiling

See the Yocto Project Application Development and the Extensible Software Development Kit (eSDK) manual, which explains how to use both the Yocto Project extensible and standard SDKs to develop applications and images.

The Yocto SDK allows cross-compiling applications for the qtec camera.

Standard Yocto SDK

Steps:

- Download

- Install

  - Set execute permissions for the install script:
    chmod 755 qtecos-glibc-x86_64-qtecos-image-znver1-qt5222-toolchain-<version>.sh
  - Run the script:
    ./qtecos-glibc-x86_64-qtecos-image-znver1-qt5222-toolchain-<version>.sh
  - Note that this installs into the default directory; if you have multiple SDKs, it is a good idea to choose a different directory.

- Source the environment setup script (adjust the path if necessary):
  . /opt/poky-qtec/qt5222/<version>/environment-setup-znver1-poky-linux
  This is necessary every time a new terminal is opened, in order to set up the environment for cross-compiling.

- Build the source code

  - use make, meson, etc.
  - Hello world! example

- scp the binary to the camera

- Run the application inside the camera

Extensible Yocto SDK

The main difference between the Standard and the Extensible SDKs seems to be that the Extensible SDK includes devtool. Moreover, the Extensible SDK can be updated, and additional items can be installed.

Steps:

- Download

- Install

  - Set execute permissions for the install script:
    chmod 755 qtecos-glibc-x86_64-qtecos-image-znver1-qt5222-toolchain-ext-<version>.sh
  - Run the script:
    ./qtecos-glibc-x86_64-qtecos-image-znver1-qt5222-toolchain-ext-<version>.sh
  - Note that this installs into the default directory; if you have multiple SDKs, it is a good idea to choose a different directory.

- Source the environment setup script (adjust the path if necessary):
  . /home/<usr>/qtecos_sdk/qt5222/environment-setup-znver1-poky-linux
  This is necessary every time a new terminal is opened, in order to set up the environment for cross-compiling.

- Build the source code

  - use make, meson, devtool, etc.
  - Hello world! example

- scp the binary to the camera

- Run the application inside the camera

devtool

The cornerstone of the extensible SDK is a command-line tool called devtool. This tool provides a number of features that help you build, test and package software within the extensible SDK, and optionally integrate it into an image built by the OpenEmbedded build system.

The devtool command line is organized similarly to Git in that it has a number of sub-commands for each function. You can run devtool --help to see all the commands.

devtool subcommands provide entry-points into development:

- devtool add: Assists in adding new software to be built.

- devtool modify: Sets up an environment to enable you to modify the source of an existing component.

- devtool upgrade: Updates an existing recipe so that you can build it for an updated set of source files.

As with the build system, “recipes” represent software packages within devtool. When you use devtool add, a recipe is automatically created. When you use devtool modify, the specified existing recipe is used in order to determine where to get the source code and how to patch it. In both cases, an environment is set up so that when you build the recipe a source tree that is under your control is used in order to allow you to make changes to the source as desired. By default, new recipes and the source go into a “workspace” directory under the SDK.

See the devtool Quick Reference section in the Yocto Project Reference Manual or the eSDK devtool guide.

Quick how-to for devtool:

export DEVTOOL_WORKSPACE=/var/lib/yocto/workspace

devtool create-workspace $DEVTOOL_WORKSPACE

devtool modify <recipename>

devtool modify -n <recipename>

devtool build <recipename>

devtool deploy-target <recipename> <target>

devtool status

devtool reset <recipename>

Default workspace:

build/workspace/sources.

Workspace

devtool requires a workspace. It is possible to use the default one (under ``) or to create one:

export DEVTOOL_WORKSPACE=/var/lib/yocto/workspace

devtool create-workspace $DEVTOOL_WORKSPACE

Then use devtool modify to set up the recipes to work on:

devtool modify <recipename>

or

devtool modify -n <recipename>

or

devtool modify -n <recipename> <path_to_src>

The first two options are for when the source code or the recipe is in the workspace. The last option is for when you want to use source code outside the workspace.

Use devtool status to check the current status

devtool status

NOTE: Starting bitbake server...

NOTE: Started PRServer with DBfile: /home/msb/poky-qtec_sdk/qt5222/cache/prserv.sqlite3, Address: 127.0.0.1:36363, PID: 1954468

gstreamer1.0-plugins-qtec-core: /home/msb/.yp/shared/workdir/sources/gstreamer1.0-plugins-qtec-core

qtec-camera-gwt: /home/msb/.yp/shared/workdir/sources/qtec-camera-gwt

Now build and deploy to target for testing:

devtool build <recipename>

devtool deploy-target <recipename> root@<CAM_IP>

Note that you can also undeploy: devtool undeploy-target <recipename> root@<CAM_IP>

And lastly, when development is done:

devtool update-recipe <recipename>

Examples

- https://www.kernel.org/doc/html/latest/userspace-api/media/v4l/capture.c.html

- https://www.kernel.org/doc/html/latest/userspace-api/media/v4l/v4l2grab.c.html

Use #include <linux/qtec/qtec_video.h> instead of #include <linux/videodev2.h> for qtec formats.

Also use #include "libv4l2.h" instead of #include "../libv4l/include/libv4l2.h".

And remove the extra backslash from every \\n (i.e. replace \\n with \n).

Set Format

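#include <fcntl.h>   /* open() */
#include <unistd.h>  /* close() */
#include <linux/qtec/qtec_video.h>

/* xioctl(), errno_exit() and CLEAR() are the helpers from capture.c */
int fd = open("/dev/qtec/video0", O_RDWR | O_NONBLOCK, 0);
if (-1 == fd)
    errno_exit("open");

struct v4l2_format fmt;
CLEAR(fmt);  /* zero all fields, including the reserved ones */
fmt.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;
fmt.fmt.pix.width = 640;
fmt.fmt.pix.height = 480;
fmt.fmt.pix.pixelformat = V4L2_PIX_FMT_GREY;
if (-1 == xioctl(fd, VIDIOC_S_FMT, &fmt))
    errno_exit("VIDIOC_S_FMT");
/* The driver may adjust the values; fmt now holds the format actually set */

close(fd);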

Main Loop

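while (true) {  /* true requires <stdbool.h> */
    for (;;) {
        fd_set fds;
        struct timeval tv;
        int r;

        /* select() modifies fds and tv, so set them up on every iteration */
        FD_ZERO(&fds);
        FD_SET(fd, &fds);
        tv.tv_sec = 2;
        tv.tv_usec = 0;

        r = select(fd + 1, &fds, NULL, NULL, &tv);
        if (-1 == r) {
            if (EINTR == errno)
                continue;
            errno_exit("select");
        }
        if (0 == r) {
            fprintf(stderr, "select timeout\n");
            exit(EXIT_FAILURE);
        }
        if (read_frame())
            break;
        /* EAGAIN - continue select loop. */
    }
}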
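The select()-then-read pattern of the main loop can be tried in Python without a camera. In this sketch a non-blocking os.pipe() stands in for the video device opened with O_NONBLOCK (an assumption for demonstration only, and the helper name is hypothetical). BlockingIOError is Python's form of EAGAIN, and since Python 3.5 select() retries automatically on EINTR, so no explicit EINTR branch is needed.

```python
import os
import select


def wait_and_read(fd, size, timeout_s=2.0):
    """Wait until fd is readable, then read up to size bytes."""
    while True:
        readable, _, _ = select.select([fd], [], [], timeout_s)
        if not readable:
            # Same as the "select timeout" branch in the C code
            raise TimeoutError("select timeout")
        try:
            return os.read(fd, size)
        except BlockingIOError:
            # EAGAIN: nothing to read after all, go back to select()
            continue


r, w = os.pipe()
os.set_blocking(r, False)  # like O_NONBLOCK in the C open() call
os.write(w, b"frame-0")
print(wait_and_read(r, 64))  # b'frame-0'
os.close(r)
os.close(w)
```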

read_frame() (read)

if (-1 == read(fd, buffers[0].start, buffers[0].length)) {
    switch (errno) {
    case EAGAIN:
        return 0;
    default:
        errno_exit("read");
    }
}
process_image(buffers[0].start, buffers[0].length);
return 1;

Memory Allocation (read)

buffers = calloc(1, sizeof(*buffers));
if (!buffers) {
    fprintf(stderr, "Out of memory\n");
    exit(EXIT_FAILURE);
}

buffers[0].length = buffer_size;
buffers[0].start = malloc(buffer_size);
if (!buffers[0].start) {
    fprintf(stderr, "Out of memory\n");
    exit(EXIT_FAILURE);
}

read_frame() (mmap)

CLEAR(buf);
buf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;
buf.memory = V4L2_MEMORY_MMAP;

if (-1 == xioctl(fd, VIDIOC_DQBUF, &buf)) {
    switch (errno) {
    case EAGAIN:
        return 0;
    default:
        errno_exit("VIDIOC_DQBUF");
    }
}

assert(buf.index < n_buffers);
process_image(buffers[buf.index].start, buf.bytesused);

if (-1 == xioctl(fd, VIDIOC_QBUF, &buf))
    errno_exit("VIDIOC_QBUF");
return 1;

Memory Allocation (mmap)

struct v4l2_requestbuffers req;

CLEAR(req);
req.count = 4;
req.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;
req.memory = V4L2_MEMORY_MMAP;
if (-1 == xioctl(fd, VIDIOC_REQBUFS, &req))
    errno_exit("VIDIOC_REQBUFS");
/* The driver may grant fewer buffers than requested */
if (req.count < 2) {
    fprintf(stderr, "Insufficient buffer memory\n");
    exit(EXIT_FAILURE);
}

buffers = calloc(req.count, sizeof(*buffers));
if (!buffers) {
    fprintf(stderr, "Out of memory\n");
    exit(EXIT_FAILURE);
}

for (n_buffers = 0; n_buffers < req.count; ++n_buffers) {
    struct v4l2_buffer buf;

    CLEAR(buf);
    buf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;
    buf.memory = V4L2_MEMORY_MMAP;
    buf.index = n_buffers;
    if (-1 == xioctl(fd, VIDIOC_QUERYBUF, &buf))
        errno_exit("VIDIOC_QUERYBUF");

    buffers[n_buffers].length = buf.length;
    buffers[n_buffers].start = mmap(NULL, buf.length, PROT_READ | PROT_WRITE, MAP_SHARED, fd, buf.m.offset);
    if (MAP_FAILED == buffers[n_buffers].start)
        errno_exit("mmap");
}
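The per-buffer mmap() call above has a direct counterpart in Python's mmap module. In this sketch an ordinary temporary file stands in for the video device, and the offset plays the role of buf.m.offset returned by VIDIOC_QUERYBUF (an assumption for demonstration only; the helper name is hypothetical). It shows that writes through a MAP_SHARED mapping land in the underlying object, which is what lets the application see the frames the driver writes.

```python
import mmap
import tempfile


def map_buffer(fd, length, offset):
    """Map length bytes of fd at offset, shared and read/write (like the C code)."""
    return mmap.mmap(fd, length, flags=mmap.MAP_SHARED,
                     prot=mmap.PROT_READ | mmap.PROT_WRITE, offset=offset)


# Two "buffers" of one allocation unit each (offsets must be multiples of this)
BUF_LEN = mmap.ALLOCATIONGRANULARITY

with tempfile.TemporaryFile() as f:
    f.truncate(2 * BUF_LEN)
    second = map_buffer(f.fileno(), BUF_LEN, offset=BUF_LEN)
    second[:5] = b"hello"  # write through the mapping
    second.close()         # the munmap() step
    f.seek(BUF_LEN)
    print(f.read(5))       # b'hello'
```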

Start Capture (mmap)

unsigned int i;
enum v4l2_buf_type type;

/* Hand all buffers to the driver before starting the stream */
for (i = 0; i < n_buffers; ++i) {
    struct v4l2_buffer buf;

    CLEAR(buf);
    buf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;
    buf.memory = V4L2_MEMORY_MMAP;
    buf.index = i;
    if (-1 == xioctl(fd, VIDIOC_QBUF, &buf))
        errno_exit("VIDIOC_QBUF");
}

type = V4L2_BUF_TYPE_VIDEO_CAPTURE;
if (-1 == xioctl(fd, VIDIOC_STREAMON, &type))
    errno_exit("VIDIOC_STREAMON");